The problem

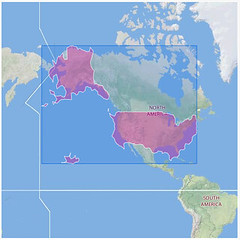

The volume of point data keeps growing, and with it the need to cross reference the GBIF data with external sources, in this case the protected areas of the world.

The last 3 years of working on reasonably large databases (>100G) have taught me that this join was not going to be an easy one, despite the obvious partitioning strategy available here (spatial partitioning).

Therefore Javi and I set about this task by:

- Using PostGIS and the traditional approach of joining 2 tables, one of 150M point records and one of 120,000 polygon records (findings will be posted shortly)

- Using Hadoop on Amazon EC2 to process the join

This is ongoing but I thought I'd post the findings so far.

Strategy 1:

Partition by cell

Start by splitting the 150M records from one file into a file per 1 degree cell.

Loop over each polygon:

- Determine the 1 degree cells covered by the polygon

- Use those cell files as a merged input to Hadoop and pass in the polygon for the Map to do the contains()
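The cell bookkeeping for this strategy can be sketched roughly as below. This is only an illustration, not the code we ran: the class name `CellGrid`, the row-major numbering and the use of a polygon's bounding box to pick its cells are all assumptions. Note the bounding box can include cells the polygon never actually touches, so it gives a superset of the files needed.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper mapping coordinates to 1 degree cell ids. */
class CellGrid {

    /** Cell id in 0..64799: 180 latitude rows of 360 longitude columns, row-major. */
    static int cellId(double lat, double lon) {
        // floor() so that -0.5 falls in the cell starting at -1, not at 0;
        // min() so that lat = 90 or lon = 180 lands in the last row or column
        int row = Math.min((int) Math.floor(lat) + 90, 179);
        int col = Math.min((int) Math.floor(lon) + 180, 359);
        return row * 360 + col;
    }

    /** Ids of every cell touched by a bounding box, e.g. the envelope of a polygon. */
    static List<Integer> cellsCovering(double minLat, double minLon,
                                       double maxLat, double maxLon) {
        int latFrom = (int) Math.floor(minLat);
        int latTo = Math.min((int) Math.floor(maxLat), 89);
        int lonFrom = (int) Math.floor(minLon);
        int lonTo = Math.min((int) Math.floor(maxLon), 179);

        List<Integer> ids = new ArrayList<>();
        for (int lat = latFrom; lat <= latTo; lat++) {
            for (int lon = lonFrom; lon <= lonTo; lon++) {
                ids.add(cellId(lat, lon));
            }
        }
        return ids;
    }
}
```

Each point would be written to the file named after `cellId(lat, lon)`, and each polygon would take the files listed by `cellsCovering(...)` as its job input.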

Result

Hadoop would not handle so many file splits with any configuration I have tried so far. Mailing list responses suggest this is not the ideal use of Hadoop, which prefers single large files to many smaller ones.

Amendment

With 10 degree cells it does work, but takes a LONG time... so long that I killed it. This was expected, since it is really only the equivalent of a DB join with an index on an INTEGER teg_deg column.

Strategy 2:

1-Degree in-memory index of polygons

This approach goes through the join the opposite way. The polygons are loaded into memory as a HashMap<Integer, Collection<Polygon>> for each Map operation to use.

The logic is effectively "give me the polygons intersecting the 1 degree square for this point", and then test them properly. This worked OK, but requires a LOT of memory to hold the polygons.
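A minimal sketch of that lookup follows, using only the JDK. The `Polygon` class here is a stand-in (a simple ring without holes, tested by ray casting) rather than the geometry class used in the real job, and `PolygonGridIndex`, `add` and `polygonsContaining` are made-up names for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;

/** Sketch of a 1 degree grid index over polygons; all names are illustrative. */
class PolygonGridIndex {

    /** Stand-in geometry: one ring of vertices, no holes. */
    static final class Polygon {
        final String id;
        final double[] lons;
        final double[] lats;

        Polygon(String id, double[] lons, double[] lats) {
            this.id = id;
            this.lons = lons;
            this.lats = lats;
        }

        /** Even-odd ray casting: count edge crossings to the right of the point. */
        boolean contains(double lon, double lat) {
            boolean inside = false;
            for (int i = 0, j = lons.length - 1; i < lons.length; j = i++) {
                boolean crosses = (lats[i] > lat) != (lats[j] > lat);
                if (crosses && lon < (lons[j] - lons[i]) * (lat - lats[i])
                        / (lats[j] - lats[i]) + lons[i]) {
                    inside = !inside;
                }
            }
            return inside;
        }
    }

    private final Map<Integer, Collection<Polygon>> byCell = new HashMap<>();

    static int cellId(double lon, double lat) {
        int row = Math.min((int) Math.floor(lat) + 90, 179);
        int col = Math.min((int) Math.floor(lon) + 180, 359);
        return row * 360 + col;
    }

    /** Registers the polygon under every cell touched by its bounding box. */
    void add(Polygon p) {
        int lonFrom = (int) Math.floor(Arrays.stream(p.lons).min().getAsDouble());
        int lonTo = (int) Math.floor(Arrays.stream(p.lons).max().getAsDouble());
        int latFrom = (int) Math.floor(Arrays.stream(p.lats).min().getAsDouble());
        int latTo = (int) Math.floor(Arrays.stream(p.lats).max().getAsDouble());
        for (int lat = latFrom; lat <= latTo; lat++) {
            for (int lon = lonFrom; lon <= lonTo; lon++) {
                // a set, so a polygon is never listed twice in the same cell
                byCell.computeIfAbsent(cellId(lon, lat), k -> new LinkedHashSet<>()).add(p);
            }
        }
    }

    /** Candidates come from the point's cell; each one then gets the exact test. */
    List<String> polygonsContaining(double lon, double lat) {
        List<String> hits = new ArrayList<>();
        for (Polygon p : byCell.getOrDefault(cellId(lon, lat), Collections.emptyList())) {
            if (p.contains(lon, lat)) {
                hits.add(p.id);
            }
        }
        return hits;
    }
}
```

The memory cost mentioned above shows up here too: a large polygon is referenced from every cell its bounding box touches, and every Map JVM holds the full set of geometries.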

Result

About 6 hours to process 60,000 polygons.

Strategy 3:

RTree index of polygons

Very similar to strategy 2, this approach indexes all the polygons by RTree. Thus for each point, the index provides only candidate polygons whose bounding box contains the point, and the number of candidate polygons to test is far smaller than that of strategy 2.
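To show why the candidate list shrinks, here is a small comparison of the two filter rules. It is a sketch only: a plain linear scan stands in for the R-tree (the real index returns the same bounding-box candidates without visiting every entry), and `BBoxFilter`, `BBox` and both method names are invented for the example.

```java
import java.util.ArrayList;
import java.util.List;

/** Filter step only; a linear scan stands in for the R-tree query. */
class BBoxFilter {

    /** Bounding box of one polygon. */
    static final class BBox {
        final String id;
        final double minLon, minLat, maxLon, maxLat;

        BBox(String id, double minLon, double minLat, double maxLon, double maxLat) {
            this.id = id;
            this.minLon = minLon;
            this.minLat = minLat;
            this.maxLon = maxLon;
            this.maxLat = maxLat;
        }

        boolean contains(double lon, double lat) {
            return lon >= minLon && lon <= maxLon && lat >= minLat && lat <= maxLat;
        }

        boolean intersectsCell(int cellLon, int cellLat) {
            return minLon <= cellLon + 1 && maxLon >= cellLon
                    && minLat <= cellLat + 1 && maxLat >= cellLat;
        }
    }

    /** Strategy 2 rule: every polygon whose box touches the point's 1 degree cell. */
    static List<String> cellCandidates(List<BBox> boxes, double lon, double lat) {
        int cellLon = (int) Math.floor(lon);
        int cellLat = (int) Math.floor(lat);
        List<String> ids = new ArrayList<>();
        for (BBox b : boxes) {
            if (b.intersectsCell(cellLon, cellLat)) {
                ids.add(b.id);
            }
        }
        return ids;
    }

    /** Strategy 3 rule: only polygons whose box contains the point itself. */
    static List<String> bboxCandidates(List<BBox> boxes, double lon, double lat) {
        List<String> ids = new ArrayList<>();
        for (BBox b : boxes) {
            if (b.contains(lon, lat)) {
                ids.add(b.id);
            }
        }
        return ids;
    }
}
```

With three small boxes inside the same 1 degree cell, the cell rule returns all three for any point in that cell, while the bounding-box rule returns only those that actually surround the point. Either way the survivors still need the exact contains() test.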

Result

45 minutes for a 130M x 30,000 cross reference, but it requires the larger EC2 instance sizes. The RTree index means it will need to be processed in batches.

Thanks to the Hadoop-core Users mailing list for their insights, and to Andrea of OpenGEO for pointing me at the JTS RTree implementation.

Hadoop requires tuning, just like a database does for large joins. Here is the final tuning for strategy 3:

- mapred.child.java.opts=-Xmx1G // for the R-Tree index

- mapred.output.compress=false // for easy reading of output

- mapred.tasktracker.map.tasks.maximum=4 // we have 7G per node, so 4G for 4 Map tasks is ok

- mapred.job.reuse.jvm.num.tasks=-1 // reuse the Map JVM, as it already has the index built (takes 90 seconds at startup)

- dfs.block.size=134217728 // use larger blocks - in retrospect this was probably a mistake and will be removed for the next test
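The numbers in those settings are easier to sanity check when written out. The helper below is purely illustrative (`TuningArithmetic` and its methods are not part of Hadoop), and it assumes the 7G and 1G figures are binary gigabytes.

```java
/** Back-of-envelope checks for the settings above; not a Hadoop API. */
class TuningArithmetic {

    /** Converts a byte count, such as dfs.block.size, to megabytes. */
    static long bytesToMb(long bytes) {
        return bytes / (1024L * 1024L);
    }

    /** Total heap claimed when every map slot on a node is busy. */
    static long mapHeapTotalMb(int mapSlots, long heapMbPerTask) {
        return mapSlots * heapMbPerTask;
    }

    /** Memory left on the node for the OS, daemons and JVM overhead beyond -Xmx. */
    static long headroomMb(long nodeMb, int mapSlots, long heapMbPerTask) {
        return nodeMb - mapHeapTotalMb(mapSlots, heapMbPerTask);
    }
}
```

So `bytesToMb(134217728L)` gives 128, meaning the block size is 128 MB, and `headroomMb(7 * 1024, 4, 1024)` gives 3072, which is the room left on a 7G node once 4 Map tasks each take their 1G heap.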

More to follow...